August 25, 2013

Server Fault: Unanswered

Wget site mirroring – superuser.com

Drobo vs. Synology vs. Qnap vs. DIY – superuser.com

My Keyboard won't repeat – superuser.com

Computer Monitor Goes black When Gaming – superuser.com

Output ffmpeg to certain format – superuser.com

Is Bluestacks App Player safe? – superuser.com

Planet Debian

Joey Hess: southern fried science fiction with plum sauce

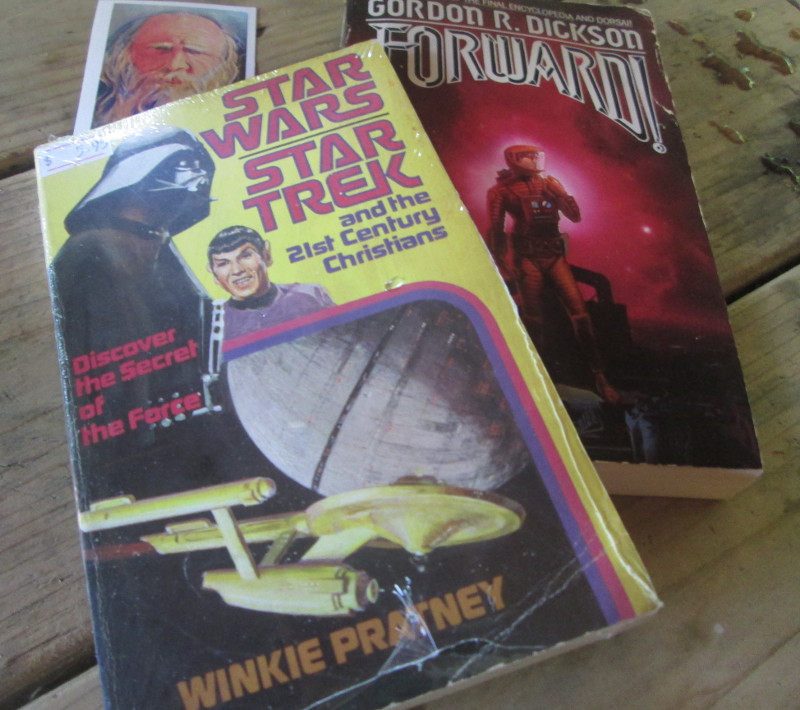

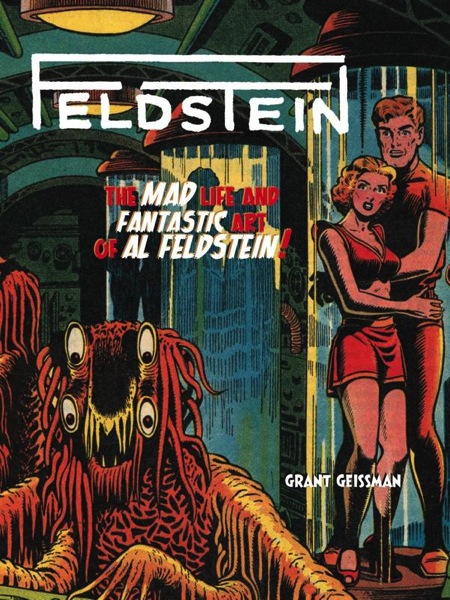

Had an afternoon of steak and science fiction. Elysium is only so-so, but look what we found in a bookstore that was half religious materials and half SF, local books, and carefully hidden romance novels:

Best part was at the end, when I finally found one of the local Asian markets Tomoko tells us about when she casually pulls out the good stuff at family gatherings. I will be back for whole ducks, fresh fish, squid, 50 lb bags of rice, tamarind paste, fresh ginger that has not sat on the shelf for 2 months because only I buy it, etc. Only an hour from home in the woods! Between the garlic sprouts, bean sprouts, enoki mushrooms, etc. that I got for $10 today and this week's CSA surprise of 18-inch snake beans and smoked pork knuckles, I have epic stir-fry potential.

Server Fault: Unanswered

Crashplan backup to a friend open source alternative – superuser.com

Excel 2010: Hyperlink execution not possible – superuser.com

Dell Inspiron 545 won't power up after being switched off for 2 weeks whilst on holiday, has solid green LED but nothing else – superuser.com

MS Outlook account grows really fast – superuser.com

Programmatically changing filenames without breaking shortcuts – superuser.com

Server 2012 Essentials Remote Desktop – superuser.com

Change outgoing repo on a hgsubversion checkout – superuser.com

Boing Boing

Volkswagen Microbus to end production

Goodbye, old friend.

Jason Torchinsky at Jalopnik offers a wonderful goodbye to the faithful Volkswagen Microbus. This year marks the end of production, which continued in Brazil. The cab-over design that makes the Bus (and Vanagon) such a pleasure to drive resists meeting current safety standards.

So much of my driving experience has been with rear-engine VW boxer designs: the Bus, the Beetle, the Vanagon, Porsche's 356 Speedster and 911. They are truly beautifully designed and a pleasure to drive, each within its limitations (or, in the case of a modern 911, lack thereof).

I've harbored the fantasy of buying a new Brazilian bus for years. I'm sorry to see it go.

Old-School VW Microbus Will Finally End Production This Year via Jalopnik

Server Fault: Unanswered

How can I make ClearType change subpixel layout when I rotate my tablet? – superuser.com

Planet Sysadmin

Everything Sysadmin: LOPSA NJ Chapter meeting: IBM Blue Gene /P, Thu, Sept 5, 2013

It isn't on the website yet, but the September meeting will have a special guest:

Title: Anatomy of a Supercomputer: The architecture of the IBM Blue Gene /P.

IBM refers to its Blue Gene family of supercomputers as 'solutions'. This talk will discuss the problems facing high-performance computing (HPC) that the Blue Gene architecture was designed to solve, focusing on the Blue Gene /P model. To help those unfamiliar with the field, the talk will begin with a brief explanation of HPC that anyone should be able to understand.

Mini-Bio:

Prentice Bisbal first became interested in scientific computing while earning a BS in Chemical Engineering at Rutgers University. After about 2 years as a practicing Engineer, Prentice made the leap to scientific computing and has never looked back. He has been a Unix/Linux system administrator specializing in scientific/high performance computing ever since. In June 2012, he came full circle when he returned to Rutgers as the Manager of IT for the Rutgers Discovery Informatics Institute (RDI2), where he is responsible for supporting Excalibur, a 2048-node (8192 cores) IBM Blue Gene/P Supercomputer.

Server Fault: Unanswered

Dual boot Mac with Hypervisor – superuser.com

Windows 7 : Stop showing tooltip when hovering opened applications on taskbar and instantly show thumbnails preview – superuser.com

MySQL problem in port: 3306 – serverfault.com

Reverse tunnelling from office machine running Windows 7 for VNC, SOCKS and SSH – superuser.com

CPU Upgrade for Toshiba Satellite P205-S7476 – superuser.com

How to use new google voice searcher to write text in notepad? – superuser.com

Ctrl-D does not do what it's supposed to in Cygwin on Win7, according to C tutorials – superuser.com

HP Photosmart C6180 won't boot – superuser.com

There, I Fixed It

It's So Much Cooler Now

Server Fault: Unanswered

How to associate floating IPs to running instances? – serverfault.com

Restore From Database not showing database I want to pick – serverfault.com

Kernel module blacklist not working – serverfault.com

Planet Ubuntu

Jonathan Carter: Still Alive

I’ve been really quiet on the blogging front the last few months. There’s been a lot happening in my life. In short, I moved back to South Africa and started working for another company. I have around 10 blog drafts that I will probably never finish, so I’m just declaring post bankruptcy and starting from scratch (who wants to read my old notes from Ubuntu VUDS from March anyway?)

For what it’s worth, I’m still alive and doing very well, possibly better than ever. Over the next few months I want to focus my free software time on the Edubuntu project, my Debian ITPs (which I’ve neglected so badly they’ve been turned into RFPs) and Flashback. Once I’ve caught up there’s plenty of other projects where I’d like to focus some energy as well (LTSP, Debian-Edu, just to name a few).

Thanks for reading! More updates coming soon.

Planet Debian

Gregor Herrmann: RC bugs 2013/31-34

after a break, here's again a report about my work on RC bugs. in this case, all bugs either affect packages in the pkg-perl group and/or packages involved in the Perl 5.18 transition.

- #689899 – mgetty: "Ships a folder in /var/run or /var/lock (Policy Manual section 9.3.2)"
  raise severity, propose patch
- #693892 – src:libprelude: "prelude-manager: FTBFS with glibc 2.16"
  add some info to the bug report, later closed by maintainer
- #704784 – src:libogre-perl: "libogre-perl: Please upgrade OGRE dependency to 1.8 or greater"
  upload new upstream release (pkg-perl)
- #709680 – src:libcgi-application-plugin-stream-perl: "libcgi-application-plugin-stream-perl: FTBFS with perl 5.18: test failures"
  add patch from Niko Tyni (pkg-perl)
- #710979 – src:libperlio-via-symlink-perl: "libperlio-via-symlink-perl: FTBFS with perl 5.18: inc/Module/Install.pm"
  adopt for pkg-perl and work around ancient Module::Install
- #711434 – src:libconfig-std-perl: "libconfig-std-perl: FTBFS with perl 5.18: test failures"
  add patch from CPAN RT (pkg-perl)
- #713236 – src:libdbd-csv-perl: "libdbd-csv-perl: FTBFS: tests failed"
  upload new upstream release (pkg-perl)
- #713409 – src:license-reconcile: "license-reconcile: FTBFS: dh_auto_test: perl Build test returned exit code 255"
  add patch from Oleg Gashev (pkg-perl)
- #713580 – src:handlersocket: "handlersocket: FTBFS: config.status: error: cannot find input file: `handlersocket/Makefile.in'"
  add patch from upstream git, upload to DELAYED/2, then rescheduled to 0-day with maintainer's permission
- #718082 – src:libcatalyst-modules-perl: "libcatalyst-modules-perl: FTBFS: Tests failed"
  add missing build dependency (pkg-perl)
- #718120 – src:libbio-primerdesigner-perl: "libbio-primerdesigner-perl: FTBFS: Too early to specify a build action 'vendor'. Do 'Build vendor' instead."
  prepare a patch, upload after review (pkg-perl)
- #718161 – src:libgeo-ip-perl: "libgeo-ip-perl: FTBFS: Failed 1/5 test programs. 1/32 subtests failed."
  upload new upstream release (pkg-perl)
- #718280 – libev-perl: "libev-perl: forcing of EV_EPOLL=1 leads to FTBFS on non-linux architectures"
  prepare possible patches, upload one after review (pkg-perl)
- #718743 – src:libyaml-syck-perl: "libyaml-syck-perl: FTBFS on arm* & more"
  add patch from CPAN RT (pkg-perl)
- #719380 – src:libwx-perl: "libwx-perl: FTBFS: dh_auto_test: make -j1 test returned exit code 2"
  update versioned (build) dependency (pkg-perl)
- #719397 – src:libsql-abstract-more-perl: "libsql-abstract-more-perl: FTBFS: dh_auto_test: perl Build test returned exit code 255"
  add missing build dependency (pkg-perl)
- #719414 – pkg-components: "Update to support updated Debian::Control API"
  upload package fixed by Olof Johansson (pkg-perl)
- #719500 – src:lire: "lire: FTBFS with perl 5.18: POD errors"
  add patch to fix POD, upload to DELAYED/2
- #719501 – src:mgetty: "mgetty: FTBFS with perl 5.18: POD errors"
  apply patch from brian m. carlson, upload to DELAYED/2, then rescheduled to 0-day with maintainer's permission, then ftpmaster-auto-rejected for other reasons (cf. #689899)
- #719503 – src:mp3burn: "mp3burn: FTBFS with perl 5.18: POD errors"
  apply patch from brian m. carlson, upload to DELAYED/2
- #719504 – src:netsend: "netsend: FTBFS with perl 5.18: POD errors"
  apply patch from brian m. carlson, upload to DELAYED/2
- #719505 – src:spampd: "spampd: FTBFS with perl 5.18: POD errors"
  add patch to fix POD, upload to DELAYED/2
- #719596 – libmouse-perl: "libmouse-perl: FTBFS with Perl 5.18: t/030_roles/001_meta_role.t failure"
  upload new upstream release (pkg-perl)
- #719963 – src:grepmail: "grepmail: FTBFS with perl 5.18: 'Subroutine Scalar::Util::openhandle redefined"
  move away bundled module for tests, QA upload
- #719972 – src:libapache-authznetldap-perl: "libapache-authznetldap-perl: FTBFS with perl 5.18: syntax error at Makefile.PL"
  apply patch from brian m. carlson (pkg-perl)
- #720267 – src:libkiokudb-perl: "libkiokudb-perl: FTBFS with perl 5.18: test failures"
  upload new upstream release (pkg-perl)
- #720269 – src:libmoosex-attributehelpers-perl: "libmoosex-attributehelpers-perl: FTBFS with perl 5.18: test failures"
  add patch from CPAN RT (pkg-perl)
- #720429 – src:mail-spf-perl: "mail-spf-perl: FTBFS with perl 5.18: POD failure"
  add patch to fix POD (pkg-perl)
- #720430 – src:msva-perl: "msva-perl: FTBFS with perl 5.18: POD failure"
  send patch to bug report
- #720431 – src:oar: "oar: FTBFS with perl 5.18: POD failure"
  send patch to bug report
- #720496 – src:primaxscan: "primaxscan: FTBFS with perl 5.18: POD failure"
  send patch to bug report
- #720497 – src:profphd: "profphd: FTBFS with perl 5.18: POD failure"
  send patch to bug report
- #720665 – src:libwww-shorten-perl: "libwww-shorten-perl: FTBFS: POD coverage test failure"
  upload new upstream release (pkg-perl)
- #720670 – src:aptitude-robot: "aptitude-robot: FTBFS with perl 5.18: test failures"
  try to investigate
- #720776 – src:bioperl: "bioperl: FTBFS with perl 5.18: test failures"
  forward upstream
- #720787 – src:libconfig-model-lcdproc-perl: "libconfig-model-lcdproc-perl: FTBFS: Can't locate Config/Model/Tester.pm in @INC"
  add missing build dependency (pkg-perl)
- #720788 – src:libconfig-model-openssh-perl: "libconfig-model-openssh-perl: FTBFS: Can't locate Config/Model/Tester.pm in @INC"
  add missing build dependency in git (pkg-perl)

Planet UKnot

Net Into Dire Muck (an anagram of Nominet Direct UK)

I’ve written before (here, here and here) on Nominet’s proposals for registrations in the second level domain. That means you can register example.uk rather than example.co.uk. Superficially that sounds a great idea, until you realise that if you have already registered example.co.uk you’ll either have to register example.uk (if you even get the chance) and pay Nominet and a registrar a fee for doing so, or be content with someone else registering it. This is a horse Nominet continues to flog, no doubt due to its obstinate refusal to die quite yet.

I encourage you to respond to Nominet’s second consultation on the matter (they’ve made that pretty easy to do).

My view is that this is not a good idea. You can find a PDF copy of my response here. If you prefer reading from web pages, I’ve put a version below.

A. Executive Summary of Response

This document is a response to the consultation document entitled “Consultation on a new .uk domain name service” published by Nominet in July 2013. It should be read in conjunction with my specific responses to the questions asked within the document. Numbering within section B of this document corresponds to the section numbering within Nominet’s own document.

The proposals to open up .uk for second level registration remain among the least well thought-out proposals I have read from Nominet. Whilst these proposals are less dreadful than their predecessors, they remain deeply flawed and should be abandoned. The proposals pay insufficient attention to the rights and legitimate expectations of existing registrants. They continue to conflate opening up domains at the second level with trust and security. They present feedback to a one-sided consultation as if it were representative. And, most importantly, they fail to demonstrate that the proposals are in the interest of all stakeholders.

I hereby give permission to Nominet to republish this response in full, and encourage them to do so.

B. Answers to specific questions

The following section gives answers to specific questions in Nominet’s second consultation paper. Areas of text in italics are Nominet’s.

Q1. The proposal for second level domain registration

This proposal seeks to strike a better balance between the differing needs of our stakeholders and respond to the concerns and feedback raised to the initial consultation. We have ‘decoupled’ the security features from the proposal to address concerns regarding the potential creation of a ‘two tier’ domain space and compulsion to register in the second level. We have set out a more efficient registration process to enhance trust in the data and put forward an equitable, cost effective release mechanism.

Q1.a Do you agree with the proposal to enable second level domain registration in the way we have outlined?

No, I do not agree with the proposal to enable second level domain registration as outlined.

Q1.b Please tell us your reasons why.

The reasons I do not agree with the proposal to enable second level domain registration as outlined are as follows:

In general, no persuasive case has been made to open up second level domain registrations at all, and the less than persuasive case that has been put fails to adequately weigh the perceived advantages of opening up second level domain registrations against the damage caused to existing registrants. In simple terms, the collateral damage outweighs the benefits.

Whilst it is agreed that domain names are not themselves property, in many ways they behave a little like property. Domain name registrants, whether they are commercial businesses, charities or speculators, invest in brands and other material connected with their domain names. The business models of some of these participants (be they arms companies, dubious charities or ‘domainers’) may or may not be popular with some, but a consultation purporting to deal with registration policy should not be the forum for addressing that. Like it or not, these are all people who have invested in their domain name and their brand around that domain name on the basis of the registration rules currently in place. Using the property analogy, they have built their house upon land they believed they owned. Nominet here is government, planning authority and land registry rolled into one, and proposes telling the domain name owners that whilst they thought they had bought the land that they have, now others may be permitted to build on top of them – but no matter, Nominet will still ‘continue to support’ their now subterranean houses. And for the princely sum of about twice what they are paying Nominet already, they may buy the space above their existing home. Of course this is only an option, and living in the dark, below whatever neighbour might come along is an alternative. In any other setting, this would be called extortion.

Of course there are undoubtedly good reasons to open up second level domains; were we able to revisit the original decision made when commercial registrations were first allowed in .uk, second level domains would probably not exist. However the option to revisit that decision is not open to us. Therefore, to change those registration rules Nominet needs a very good reason indeed; a reason so strong, and so powerful that it trumps the rights and legitimate expectations of all those existing registrants. No such reason has yet been presented.

Nominet claims in the introduction to its second consultation paper “It was clear from this feedback [on its first consultation] that there was support for registrations at the second level”; it does not say whether this support outweighed the opposition, and the full consultation responses have never been published. In the background paper Nominet says “The feedback we received was mixed”. In the press release after the first consultation, Nominet said “It was clear from the feedback that there was not a consensus of support for the direct.uk proposals as presented”. Nominet’s initial consultation document told only one side of the story; it presented the advantages of opening registrations at the second level without putting forward any of the disadvantages. It is therefore completely unsurprising that it found favour with some respondents, particularly those unfamiliar with domain names who would not be able to intuit the disadvantages themselves, rather like a politician asking voters whether they would like lower taxes without pointing out the consequences. The second consultation is little better: nowhere does it set out the disadvantages of the proposal as a whole to existing registrants. Given this, it is remarkable how much opposition the proposal has garnered. I have yet to find anyone not in the pay of Nominet who supports this proposal, and it has managed to unite parts of the industry not normally known for their agreement in a single voice against Nominet.

For over 20 years registrations have been made in subdomains of .uk, and since 1996 that process has been managed by Nominet. Nominet claims to be a ‘force for good’ that seeks to enhance trust in the internet. Turning its back on its existing registrants that have single-handedly funded its very existence seems to me the ultimate abrogation of that trust.

The remainder of my comments on this consultation should therefore be read in the context that the best course of action for Nominet would be to admit that in this instance it has made an error, and abandon this proposal in its entirety.

Q2. Registration process for registering second level domains

We believe that validated address information and a UK address for service would promote a higher degree of consumer confidence as well as ensure that we are in a better position to enforce the terms of our Registrant Contract. We propose that registrant contact details of registrations in the second level would be validated and verified and we would also make this an option available in the third levels that we manage.

2.a Please tell us whether you agree or disagree with the proposed registration requirements we have outlined, and your reasons why. In particular, we welcome views on whether the requirements represent a fair, simple, practical, approach that would help achieve our objective of enhancing trust in the registration process and the data on record.

Validation of address information and indeed any proportionate steps that increase the accuracy of the .uk registration database are desirable. However, this is ineffective for the desired purpose (increasing consumer confidence), and in any case there is no reason to link it only to registrations at the second level.

Nominet’s logic here is flawed. Would validation of address information and a compulsory UK address for service promote a higher degree of consumer confidence? I believe the answer to this is no, for the following reasons:

Firstly, the fact that a domain name has a UK service address (which presumably would include a PO Box or similar) does not, unfortunately, guarantee that the content of the web site attached is in some way to be trusted. All it guarantees is that the web site has a UK service address. Web sites can contain malicious code whether placed there by the owner or by infection. Web sites with UK service addresses can sell fraudulent goods. Web sites with UK service addresses can turn out not to be registered to the person the viewer thought that they might be (see Nominet’s DRS case list for hundreds upon hundreds of examples). Nominet has presented no evidence that domain name registrations with UK service addresses are any less likely to carry material that should be ‘distrusted’.

Secondly, the registration address for a domain name is not easily available to the non-technical user using a web browser. Nominet appears to be around 15 years out of date in this area. Consumers increasingly do not recognise domain names at all, but rather use search engines. The domain name is becoming increasingly less relevant (despite Nominet’s research) as consumers are educated to ‘look for the green bar’ or ‘padlock’. This is a far better way, with a far easier user interface, to determine whether the web site belongs to whoever the user thought it did. It is by no means perfect, but is far more useful than Nominet’s proposal (not least as it has international support). Nominet’s proposal serves only to confuse users.

Thirdly, the idea that UK consumers would be sophisticated enough to know that domain names ending .uk had been address-validated (but not subject to further validation) unless those domains ended with co.uk, org.uk etc. is laughable. The user would have to know that www.support.org.uk is not address validated, but that www.support.ibm.uk would be, which would require the average internet user to memorise the previous table of Nominet SLDs. If Nominet hopes to gain support for address validation, it should do it across the board.

Fourthly, this once again means that existing registrants would be disadvantaged. By presenting (probably falsely) registrations in the second level as more trustworthy, this implies registrations at the third level (i.e. all existing registrations) are somehow less trustworthy, or in some way ‘dodgy’.

Nominet presents two other rationales for this move. Nominet claims it can enforce its contract more easily if the address is validated. This is somewhat hard to understand. Firstly, is Nominet not concerned about enforcing its contracts for other domain names? Secondly, Nominet should insist on a valid address for service (Nominet already pretty much does this under clauses 4.1 and 36 of its terms and conditions). If the service address is invalid, Nominet can simply terminate its contract. Thirdly, a UK service address seems a rather onerous requirement, in particular for personal registrations, such as UK citizens who have moved abroad.

Nominet also suggests such a process would ‘enhance trust in the data on record’. This is a fair point, but should apply equally to all domain names. It is also unclear why having a foreign company’s correct head office address (outside the UK) would not be acceptable, whereas a post office box to forward the mail would be acceptable.

Q3. Release process for the launch of second level domain registration

The release process prioritises existing .uk registrations in the current space by offering a six month window where registrants could exercise a right of first refusal. We believe this approach would be, the most equitable way to release registrations at the second level. Where a domain string is not registered at the third level it would be available for registration on a first-come, first-served basis at the start of the six month period or at the end of this process, if the right of first refusal has not been taken up.

Q3.a Please tell us your views on the methodology we have proposed for the potential release of second level domains. We would be particularly interested in your suggestions as to whether this could be done in a fairer, more practical or more cost-effective way.

The release mechanism proposed is less invidious than the previous scheme in that it gives priority to existing registrants. This change is to be welcomed I suppose, though is not a substitute for the correct course of action (scrapping the idea of opening the second level up at all).

The remaining challenge is how to deal equitably with the situation where the same name is held by two different registrants in different SLDs (for instance, example.co.uk and example.org.uk). The peaceful coexistence of such registrants was facilitated by the SLD system, and opening up .uk negates that facilitation. The current proposals give priority to the first registrant. This has the virtue of simplicity.

I have heard arguments that this penalises co.uk owners, who are likely to have spent more building a brand. In particular, it is argued, this penalises owners of two-letter co.uk domains, as these were released after two-letter domains in other SLDs (handled under Q3.b below). To the first, the counter-argument is that to prefer co.uk penalises org.uk owners (for instance); no doubt the minute there is speculation that Nominet might prefer co.uk owners, there will be an active market in registering names in co.uk that are only registered in org.uk.

Q3.b Are there any categories of domain names already currently registered which should be released differently, e.g. domains registered on the same day, pre-Nominet domains (where the first registration date may not be identified with certainty) and domains released in the 2011 short domains project?

I see no merit in treating pre-Nominet domain names differently provided the domain name holder has accepted Nominet’s terms and conditions.

I see no merit in treating domain names registered on the same day differently, provided Nominet can still ascertain the order of registration.

If Nominet cannot ascertain the order of registration, I would inform each party of this and invite evidence. If after admitting evidence Nominet still could not determine which registration was first, I would either allow an auction or choose at random.

With respect to the short domains project, I would argue Nominet has dug its own grave. Just like all of its registrants before, Nominet did not predict it was to open up .uk. For consistency, it could re-auction two letter domains in .uk. However, a simpler, fairer, and more equitable result would be to not open up .uk at all.

Q3.c We recognise that some businesses and consumers will want to consider carefully whether to take on any potential additional costs in relation to registering a second level domain. Therefore we are seeking views on:

- Whether the registrant of a third level domain who registers the equivalent second level should receive a discount on the second level registration fee;

- Developing a discount structure for registrants of multiple second-level .uk domains;

- Offering registrants with a right of first refusal the option to reserve (for a reduced fee) the equivalent second level name for a period of time, during which the name would be registered but not delegated.

Please tell us your views on these options, or whether there are any other steps we could take to minimise the financial impact on existing registrants who would wish to exercise their right of first refusal and register at the second level.

These proposals risk introducing excess complexity. The most equitable path would be not to open up registrations at the second level at all.

If, despite all objections, the second level is opened up, it is vital that the interests of existing registrants are protected. A simple and fair way of achieving this would be to allow any existing registrant (and only existing registrants) the registration of their .uk second level domain for free for four years (or failing that at a very substantial discount to the existing prices in third level domains). As this would be a single registration to an existing registrant for a single period the marginal costs would be low. This would be sufficient time for the registrant to change stationery, letterhead etc. in the normal course of events. This should be permitted through registrars other than the registrant’s existing registrar to encourage competition. Save for the altered price, this registration would be pari passu with any other.

Q4. Reserved and protected names

We propose to restrict the registration of <uk.uk> and <.com.uk> in the second level to reflect the very limited restrictions currently in force in the second level registries administered by Nominet. In addition, we would propose to reserve for those bodies granted an exemption through the Government’s Digital Transformation programme, the matching domain string of their .gov.uk domain in the second level.

4.a Please give us your views on whether our proposed approach strikes an appropriate balance between protecting internet users in the UK and the expectations of our stakeholders regarding domain name registration. Can you foresee any unintended complications arising from the policy we have proposed?

This is one of the stranger proposals from Nominet.

In essence a government programme (internal to the government) has removed the right of certain organisations to register within gov.uk. gov.uk is not administered by Nominet. I fail to see why those organisations that turned out to be on the wrong side of a government IT decision should have any special status whatsoever, especially when compared to registrants that have been Nominet’s customers for many years. I notice Nominet’s consultation does not even offer any rationale for this.

One example is independent.co.uk which is the site of The Independent (a UK newspaper). However, independent.gov.uk does not seem to be active. This is perhaps the most obvious example, but there are no doubt others. There is simply no reason why those ejected from gov.uk should have preferential treatment over domain name holders in .uk. At the very most, they should be given secondary status after existing domain name holders, but I fail to see why they can’t take their chances in the domain name market like any other organisation.

I am afraid this proposal smells like Nominet pandering for support from government for its otherwise unpopular proposal.

Q5. General views

Q5.a Are there any other points you would like to raise in relation to the proposal to allow second level domain registration?

- Nominet should abandon its current proposals in their entirety. Nominet has failed to explain why the proposals in toto are in the interests of its stakeholders, in particular the registrant community (who after all will have this change inflicted on them). Unless there is a high degree of consensus amongst all stakeholder groups in favour of the proposal, it should be abandoned. I believe no such consensus exists.

- Nominet should disaggregate the issue of registrations within .uk and the issue of how to help build trust in .uk in general. I said before that Nominet should run a separate consultation on opening up .uk as a simple open domain with the same rules as co.uk; Nominet has failed to do this, having retained different rules for validation, address verification and price. Both consultations conflate the issue of opening up the second level domain with issues around consumer trust (although admittedly the second consultation does this less than the first). Whilst consumer trust and so forth are important, they are orthogonal to this issue.

- Nominet should remember that a core constituency of its stakeholders are those who have registered domain names. If new registrations are introduced (permitting registration in .uk for instance), Nominet should be sensitive to the fact that these registrants will feel compelled to reregister if only to protect their intellectual property. Putting such pressure and expense on businesses to reregister is one thing (and a matter on which ICANN received much criticism in the new gTLD debate); pressurising them to reregister and rebrand by marketing their existing co.uk registration as somehow inferior is beyond the pale. Whilst the second proposal is less invidious than the first, it is still a slap in the face for existing .uk registrants.

- Nominet should recognise that there is no silver bullet (save perhaps one used for shooting oneself in the foot) for the consumer trust problem, and hence it will have to be approached incrementally.

- Nominet should be more imaginative and reacquaint itself with developments in technology and the domain market place. Nominet’s attempt to associate a particular aspect of consumer trust with a domain name is akin to attempting to reinvent the wheel, but this time with three sides. Rather, Nominet should be looking at how to work with existing technologies. For instance, if Nominet was really interested in providing enhanced security, it could issue wildcard domain validated SSL certificates for every registration to all registrants; given Nominet already has the technology to comprehensively validate who has a domain name, such certificates could be issued cheaply or for free (and automatically). This might make Nominet instantly the largest certificate issuer in the world. If Nominet wanted to further validate users, it could issue EV certificates. And it could work with emerging technologies such as DANE to free users from the grip of the current overpriced SSL market.

- There is no explanation as to why these domains should cost £4.50 per year wholesale rather than £5 for two years as is the case at the moment. If the domain name validation process is abandoned (as it should be) these domains should cost no more to maintain than any other. Perhaps the additional cost is to endow a huge fund for potential legal action? The increased charges add to the perception that the reason for Nominet pursuing opening domains at the second level is simply financial self-interest, rather than acting in the interests of its stakeholders.

Q5.b Are there any points you would like to raise in relation to this consultation?

To reiterate the point I have made before, this consultation and its ill-fated predecessor fail to put their points across in an even-handed manner. That is, they expound the advantages of Nominet’s proposal without considering its disadvantages. That is Nominet’s prerogative, but if that is the course Nominet takes then it should not attempt to present the results of such a ‘consultation’ as representative, as their consultees will have heard only one side of the story.

Server Fault Meta

Where can i get an idea other than here?

Why isnt Qmail been downloading from a version control system?

Above question of me is down voted and i think it is going to be closed

Boing Boing

This Day in Blogging History: Switching to a straight razor; Weird recycled electronics; Burning Man never gets old

One year ago today

Switching to a straight razor: I think this method delivers an insanely close shave. The best part for me is that there is almost no irritation at all. No razor burn of any kind and my face doesn't feel on fire for the next 2 hours like it did when I used a disposable razor.

Five years ago today

Strange stuff from a computer recycler: Seen above is a wire recorder (circa 1945-1955) that stores audio by magnetizing a reel of fine wire.

Ten years ago today

Wired News: Burning Man never gets old: "A piece I wrote on this year's edition of Burning Man, which begins today in the Nevada desert. About 30,000 are expected to attend."

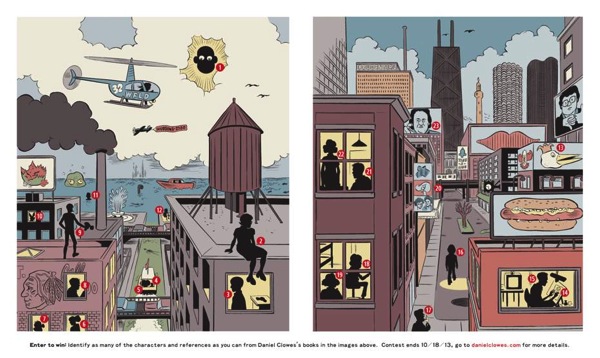

Can you identify these Daniel Clowes characters?

Can you identify all of the silhouettes in these new drawings that Daniel Clowes drew for the Modern Cartoonist exhibition murals and "Chicago Views" prints? If you can, you will have a chance to win fabulous prizes! Visit Daniel's website for details.

Guido Fawkes' blog

Coulson’s Mobile Phone Conversation Intercepted

A co-conspirator emailed on Friday:

Yesterday, I found myself walking up the Gray’s Inn Road alongside Andy Coulson. He was talking on his mobile phone to someone about the fact that his trial date had been moved. It was raining and he was mumbling a lot. But I did catch this brilliant quote:

“Whatever you do, don’t share that with anyone. Be very careful.”

I couldn’t resist papping him as he ambled along the road.

A funny thing to hear from the man who stands accused of conspiracy to intercept mobile phone voicemails, among other things. Be more careful Andy…

Tagged: Coulson, M'learned Friends, Media Guido

There, I Fixed It

Vacuum Kludge

Planet Ubuntu

Ubuntu LoCo Council: New Local communities health check process

This will be the new process, aiming to replace the current re-approval process. It aims to be less formal, more interactive and, above all, still keep people motivated to be involved in the Ubuntu community.

Every team shall be known as a LoCoteam; teams that were previously known as an “Approved LoCoteam” shall be known as a “Verified LoCoteam”. New teams shall be a LoCoteam; teams do not have to be verified. The term Verified means that a Launchpad team has been created, the team name conforms to the correct naming standard and the team contact has signed the Code of Conduct.

Every two years a team will present itself for a HealthCheck. This is still beneficial to everyone involved: it gives the team a chance to show how they are doing, and the council can also catch up with the team.

What is needed for a HealthCheck?

Create a wiki page with the activities of the period. Name the wiki page with the name of your team plus the YEAR, for example LoCoTeamVerificationApplication20XX, and include the details below:

- Name of team

- How many people are in the team

- Link to your wiki page / Launchpad group page

- Social Networks (if they have any of them).

- Link to loco team portal page, Events page, Paste events page – this is a good reason to encourage teams to use the team portal as all of the information is there and saves duplication.

- Photo Galleries of past events.

- Tell us about your team, what you do, if you have Ubuntu members in your team, your current projects.

- Guideline of what you plan on doing in the future.

- Any meeting logs, if available.

Teams will still remain verified; this is just to check in and see how things are doing. If you can’t make a meeting, it can be done over email/Bugs.

In short, the overall process should remain pretty much the same as now.

If you have any doubts or questions regarding the new process, please don't hesitate to discuss them or ask us.

Planet Debian

Joey Hess: idea: git push requests

This is an idea that Keith Packard told me. It's a brilliant way to reduce GitHub's growing lockin, but I don't know how to implement it. And I almost forgot about it, until I had another annoying "how do I send you a patch with this amazing git technology?" experience and woke up with my memory refreshed.

The idea is to allow anyone to git push to any anonymous git:// repository. But the objects pushed are not stored in a public part of the repository (which could be abused). Instead the receiving repository emails them off to the repository owner, in a git-am-able format.

So this is like a github pull request except it can be made on any git repository, and you don't have to go look up the obfuscated contact email address and jump through git-format-patch hoops to make it. You just commit changes to your local repository, and git push to wherever you cloned from in the first place. If the push succeeds, you know your patch is on its way for review.

Keith may have also wanted to store the objects in the repository in some way that a simple git command run there could apply them without the git-am bother on the receiving end. I forget. I think git-am would be good enough -- and including the actual diffs in the email would actually make this far superior to github pull request emails, which are maximally annoying by not doing so.

Hmm, I said I didn't know how to implement this, but I do know one way.

Make the git-daemon run an arbitrary script when receiving a push request.

A daemon.pushscript config setting could enable this.

The script could be something like this:

```sh
#!/bin/sh
set -e
tmprepo="$(mktemp -d)"
# this shared clone is *really* fast even for huge repositories, and uses
# only a few 100 kb of disk space!
git clone --shared --bare "$GIT_DIR" "$tmprepo"
git-receive-pack "$tmprepo"
# XXX add email sending code here.
rm -rf "$tmprepo"
```

Of course, this functionality could be built into the git-daemon too. I suspect a script hook and an example script in contrib/ might be an easier patch to get accepted into git, though.

That may be as far as I take this idea, at least for now..

Kernel Planet

Pavel Machek: Wherigo on Nokia n900 -- solved

Planet Debian

Yves-Alexis Perez: Expiration extension on PGP subkeys

So, last year I switched to an OpenPGP smartcard setup for my whole personal/Debian PGP usage. When doing so, I also switched to subkeys, since it's pretty natural when using a smartcard. I initially set up an expiration of one year for the subkeys, and everything seems to be running just fine for now.

The expiration date was set to October 27th, and I thought it'd be a good idea to renew them well in advance, considering my signing key is in there, which is (for example) used to sign packages. If the Debian archive considers my signature subkey expired, that means I can't upload packages anymore, which is a bit of a problem (although I think I could still upload packages signed by the main key). dak (Debian Archive Kit, the software managing the Debian archive) uses keys from the debian-keyring package, which is usually updated every month or so, so pushing the expiration date two months before the due date seemed like a good idea.

I've just done that, and it was pretty easy, actually. For those who followed my setup last year, here's how I did it:

First, I needed my main smartcard (the one storing the main key), since it's the only one able to do operations on the subkeys. So I plug it in, and then:

```
corsac@scapa: gpg --edit-key 71ef0ba8
gpg (GnuPG) 1.4.14; Copyright (C) 2013 Free Software Foundation, Inc.
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law.

Secret key is available.

pub 4096R/71EF0BA8 created: 2009-05-06 expires: never usage: SC
trust: ultimate validity: ultimate
sub 4096g/36E31BD8 created: 2009-05-06 expires: never usage: E
sub 2048R/CC0E273D created: 2012-10-17 expires: 2013-10-27 usage: A
sub 2048R/A675C0A5 created: 2012-10-27 expires: 2013-10-27 usage: S
sub 2048R/D98D0D9F created: 2012-10-27 expires: 2013-10-27 usage: E
[ultimate] (1). Yves-Alexis Perez <corsac@corsac.net>
[ultimate] (2) Yves-Alexis Perez (Debian) <corsac@debian.org>

gpg> key 2

pub 4096R/71EF0BA8 created: 2009-05-06 expires: never usage: SC
trust: ultimate validity: ultimate
sub 4096g/36E31BD8 created: 2009-05-06 expires: never usage: E
sub* 2048R/CC0E273D created: 2012-10-17 expires: 2013-10-27 usage: A
sub 2048R/A675C0A5 created: 2012-10-27 expires: 2013-10-27 usage: S
sub 2048R/D98D0D9F created: 2012-10-27 expires: 2013-10-27 usage: E
[ultimate] (1). Yves-Alexis Perez <corsac@corsac.net>
[ultimate] (2) Yves-Alexis Perez (Debian) <corsac@debian.org>

gpg> expire
Changing expiration time for a subkey.
Please specify how long the key should be valid.
0 = key does not expire
<n> = key expires in n days
<n>w = key expires in n weeks
<n>m = key expires in n months
<n>y = key expires in n years
Key is valid for? (0) 429d
Key expires at mar. 28 oct. 2014 12:43:35 CET
Is this correct? (y/N) y
```

At that point, a pinentry dialog should ask you for the PIN, and the smartcard will sign the subkey. Repeat for all the subkeys (in my case, 3 and 4). If you ask for PIN confirmation at every signature, the pinentry dialog should reappear each time.
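The 429d answer is easier to check than to eyeball. The final listing shows every subkey expiring on 2014-10-28, and 429 days after 2013-08-25 (the date of this digest; that the edit was made on that day is an inference, not something the post states) lands exactly there. A minimal C sketch of that arithmetic, using only the standard library, lets mktime() normalize an out-of-range day of the month; add_days() is a hypothetical helper, not part of gpg.

```c
#include <time.h>

/* Hypothetical helper: compute the calendar date DAYS days after
   YEAR-MONTH-DAY. Returns 0 on success, -1 if mktime() fails. */
int add_days(int year, int month, int day, int days,
             int *out_year, int *out_month, int *out_day)
{
    struct tm tm = {0};

    tm.tm_year = year - 1900;
    tm.tm_mon = month - 1;
    tm.tm_mday = day + days;  /* out of range on purpose: mktime() normalizes it */
    tm.tm_hour = 12;          /* noon, so a DST change cannot shift the date */
    tm.tm_isdst = -1;         /* let mktime() work out DST itself */

    if (mktime(&tm) == (time_t)-1)
        return -1;

    *out_year = tm.tm_year + 1900;
    *out_month = tm.tm_mon + 1;
    *out_day = tm.tm_mday;
    return 0;
}
```

Calling add_days(2013, 8, 25, 429, ...) should yield 2014-10-28, the same date as one year and one day after the old 2013-10-27 expiry. Working at noon is a deliberate choice: it keeps a daylight-saving transition from nudging the result onto a neighbouring day.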

When you're done, check that everything is ok, and save:

```
gpg> save
corsac@scapa: gpg --list-keys 71ef0ba8
gpg: checking the trustdb
gpg: public key of ultimately trusted key AF2195C9 not found
gpg: 3 marginal(s) needed, 1 complete(s) needed, PGP trust model
gpg: depth: 0 valid: 4 signed: 5 trust: 0-, 0q, 0n, 0m, 0f, 4u
gpg: depth: 1 valid: 5 signed: 53 trust: 5-, 0q, 0n, 0m, 0f, 0u
gpg: next trustdb check due at 2013-12-28
pub 4096R/71EF0BA8 2009-05-06
uid Yves-Alexis Perez <corsac@corsac.net>
uid Yves-Alexis Perez (Debian) <corsac@debian.org>
sub 4096g/36E31BD8 2009-05-06 [expires: 2014-10-28]
sub 2048R/CC0E273D 2012-10-17 [expires: 2014-10-28]
sub 2048R/A675C0A5 2012-10-27 [expires: 2014-10-28]
sub 2048R/D98D0D9F 2012-10-27 [expires: 2014-10-28]
```

Now that we have the new subkeys definition locally, we need to push it to the keyservers so other people get it too. In my case, I also need to push it to the Debian keyring keyserver so it gets picked up at the next update:

```
corsac@scapa: gpg --send-keys 71ef0ba8
gpg: sending key 71EF0BA8 to hkp server subkeys.pgp.net
corsac@scapa: gpg --keyserver keyring.debian.org --send-keys 71ef0ba8
gpg: sending key 71EF0BA8 to hkp server keyring.debian.org
```

My main smartcard is now back in a safe place. As far as I can tell, no operation is needed on the daily smartcard (which only holds the subkeys), but you will need to refresh your public key on any machine you use it on before that machine sees the updated expiration date.

Vincent Bernat: Boilerplate for autotools-based C project

When starting a new HTML project, a common base is to use HTML5 Boilerplate which helps by setting up the essential bits. Such a template is quite useful for both beginners and experienced developers as it is kept up-to-date with best practices and it avoids forgetting some of them.

Recently, I have started several little projects written in C for a customer. Each project was bootstrapped from the previous one. I thought it would be useful to start a template that I could reuse easily. Hence, bootstrap.c[1], a template for simple projects written in C with the autotools, was born.

Usage

A new project can be created from this template in three steps:

- Run Cookiecutter, a command-line tool to create projects from project templates, and answer the questions.

- Set up Git.

- Complete the “todo list”.

Cookiecutter

Cookiecutter is a new tool to create projects from project templates. It uses Jinja2 as a template engine for file names and contents. It is language agnostic: you can use it for Python, HTML, Javascript or… C!

Cookiecutter is quite simple. You can read an introduction from Daniel Greenfeld. The Debian package is currently waiting in the NEW queue and should be available in a few weeks in Debian Sid. You can also install it with pip.

Bootstrapping a new project is super easy:

```
$ cookiecutter https://github.com/vincentbernat/bootstrap.c.git
Cloning into 'bootstrap.c'...
remote: Counting objects: 90, done.
remote: Compressing objects: 100% (68/68), done.
remote: Total 90 (delta 48), reused 64 (delta 22)
Unpacking objects: 100% (90/90), done.
Checking connectivity... done
full_name (default is "Vincent Bernat")? Alfred Thirsty
email (default is "bernat@luffy.cx")? alfred@thirsty.eu
repo_name (default is "bootstrap")? secretproject
project_name (default is "bootstrap")? secretproject
project_description (default is "boilerplate for small C programs with autotools")? Super secret project for humans
```

Cookiecutter asks a few questions to instantiate the template correctly. The result has been stored in the secretproject directory:

```
.
├── autogen.sh
├── configure.ac
├── get-version
├── m4
│   ├── ax_cflags_gcc_option.m4
│   └── ax_ld_check_flag.m4
├── Makefile.am
├── README.md
└── src
    ├── log.c
    ├── log.h
    ├── Makefile.am
    ├── secretproject.8
    ├── secretproject.c
    └── secretproject.h

2 directories, 13 files
```

Remaining steps

There are still some steps to be executed manually. You first need to initialize Git, as some features of this template rely on it:

```
$ git init
Initialized empty Git repository in /home/bernat/tmp/secretproject/.git/
$ git add .
$ git commit -m "Initial import"
[...]
```

Then, you need to extract the todo list built from the comments contained in source files:

```
$ git ls-tree -r --name-only HEAD | \
>   xargs grep -nH "T[O]DO:" | \
>   sed 's/\([^:]*:[^:]*\):\(.*\)T[O]DO:\(.*\)/\3 (\1)/' | \
>   sort -ns | \
>   awk '(last != $1) {print ""} {last=$1 ; print}'

2003 Add the dependencies of your project here. (configure.ac:52)
2003 The use of "Jansson" here is an example, you don't have (configure.ac:53)
2003 to keep it. (configure.ac:54)

2004 Each time you have used `PKG_CHECK_MODULES` macro (src/Makefile.am:12)
2004 in `configure.ac`, you get two variables that (src/Makefile.am:13)
2004 you can substitute like above. (src/Makefile.am:14)

3000 It's time for you program to do something. Add anything (src/secretproject.c:76)
3000 you want here. */ (src/secretproject.c:77)
[...]
```

Only a few minutes are needed to complete those steps.

What do you get?

Here are the main features:

- Minimal configure.ac and Makefile.am.

- Changelog based on Git logs and automatic version from Git tags[2].

- Manual page skeleton.

- Logging infrastructure with variadic functions like log_warn(), log_info().
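The template's actual log.c is not reproduced in the post, so the following is only a hypothetical sketch of what variadic functions like log_warn() and log_info() usually look like in C: each public function collects its arguments with stdarg.h and forwards the va_list to one shared writer built on vfprintf(). The log_stream variable and the "[LEVEL]" prefix are assumptions made for illustration.

```c
#include <stdarg.h>
#include <stdio.h>

/* Hypothetical destination for log lines; NULL means stderr. */
static FILE *log_stream = NULL;

/* Shared writer: prints "[LEVEL] " then the caller's formatted message. */
static void log_vwrite(const char *level, const char *fmt, va_list ap)
{
    FILE *out = log_stream != NULL ? log_stream : stderr;

    fprintf(out, "[%s] ", level);
    vfprintf(out, fmt, ap);
    fputc('\n', out);
}

void log_warn(const char *fmt, ...)
{
    va_list ap;

    va_start(ap, fmt);
    log_vwrite("WARN", fmt, ap);
    va_end(ap);
}

void log_info(const char *fmt, ...)
{
    va_list ap;

    va_start(ap, fmt);
    log_vwrite("INFO", fmt, ap);
    va_end(ap);
}
```

A call such as log_warn("cannot open %s", path) then behaves like printf() with a level prefix. Funnelling everything through one va_list function means adding another level is just another small wrapper.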

About the use of the autotools

The autotools are a suite of tools to provide a build system for a project, including:

- autoconf to generate a configure script, and

- automake to generate makefiles using a similar but higher-level language.

Understanding the autotools can be quite a difficult task. There is a lot of bad documentation on the web, and the manual does not help by describing corner cases that would be useful if you wanted your project to compile for HP-UX. So, why do I use them?

- I have invested a lot of time in the understanding of this build system. Once you grasp how it should be used, it works reasonably well and can cover most of your needs. Maybe CMake would be a better choice but I have yet to learn it. Moreover, the autotools are so widespread that you have to know how they work.

- There are a lot of macros available for autoconf. Many of them are included in the GNU Autoconf Archive and ready to use. The quality of such macros is usually quite good. If you need to correctly detect the appropriate way to compile a program with GNU Readline or something compatible, there is a macro for that.

If you want to learn more about the autotools, do not read the manual. Instead, have a look at Autotools Mythbuster. Start with a minimal configure.ac and do not add useless macros: a macro should be used only if it solves a real problem.

Happy hacking!

[1] Retrospectively, I think boilerplate.c would have been a better name.

[2] For more information on those features, have a look at their presentation in a previous post about lldpd.

Planet Debian

Francesca Ciceri: Some things I learnt at DebConf13

People from Argentina don't like to shake hands when you first meet them: it's too formal (sorry, Marga!)

Video team volunteering is extremely fun. Even directing is fun, except when the speaker decides to wander out of the talkroom just to make a point during a demo.

I won't call names, but I'm not the only one in Debian to remember how to dance the Time Warp and not ashamed to do it in public.

You can bribe a bloodthirsty deity with cheese, especially if the deity is a French one and you are in Switzerland.

A zip line is also called: tirolina (in Spanish), tyrolienne (in French) and - my all time favourite - Tarzanbahn in German, or at least in the German spoken by A. when he was a kid.

Given the number of talks about it this year, we - as a community - care a lot about newcomers, mentoring, creating a welcoming environment and community outreach in general. That's really great!

Tagging yourself on your badge with your main interests provides conversation starters and makes it easier to meet new people. Many thanks to Bremner for this brilliant idea.

Cheese&Wine party is the perfect time to discuss pedagogical methods (and discover interesting projects like Sugar (desktop environment)).

During a Mao game, an extremely simple rule introduced by a newbie can turn out to be too difficult to guess, even for seasoned players. Oh, the irony and the inherent democracy of that! All hail the helicopter!

Once in a while, cooking dinner for upstream and/or sitting with them around a table to plan your next moves is a good idea, as well as a common practice in Community-supported agriculture.

There are people out there brave enough to stand up and declaim their poems or poems they love. As a shy person, I am really in awe of them. They make a sentence like "There will be poetry" sound less threatening.

If you sleep in room 43, next door is the answer. Or the previous one, depending on the direction you're walking.

Planet Ubuntu

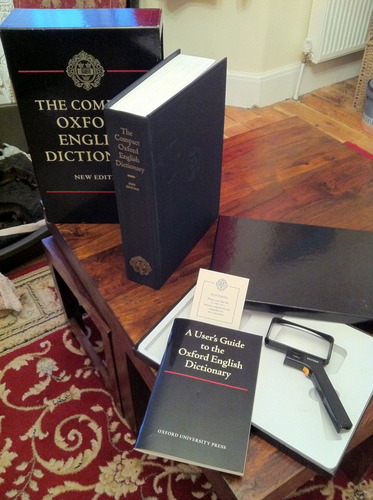

Valorie Zimmerman: The Decipherment of Linear B

Why a book on such an obscure subject? Yes, it's about Mycenaean Greek! But more important, it's about how a young person with an interest in languages and training in architecture (Michael Ventris) could use this background to crack one of the biggest mysteries revealed by modern archaeology. And he contradicted the leading experts and prevailing opinion that Linear B could not be Greek. When he proved that the Mycenaeans spoke Greek, he pushed the beginnings of European history back many centuries.

Ventris won over the experts by doing careful analysis, and always sharing his work as he proceeded. In fact, after reading all the published research, he began his work by surveying the twelve leading experts with a series of questions. This questionnaire was penetrating enough that he got ten answers. After compiling these answers and adding his own analysis, he sent out the survey results to all the experts. By getting a good grasp of current scholarship, he had built a wonderful foundation on which to build his study of the inscriptions.

Although it has not been proven that Ventris did any code-breaking during the war, his method of analysis certainly owes much to that Bletchley Park work. Also discussed here is Alice Kober, whose early work set Ventris on the right path. Her card catalogue was invaluable to Ventris after her life was cut short by cancer. Ventris always consulted other experts, and was generous in sharing credit. He never succeeded in proving what he set out to do, which was to prove that Linear B was Etruscan. Instead, he put in the hard work of analysis of all the evidence, and followed the trail to the end, proving what he started out believing impossible: Linear B is ancient Greek.

Read this book, and be inspired! Grab it used or get it from the library as I did. Although available for Kindle, the symbols render badly in that edition.

by noreply@blogger.com (Valorie Zimmerman) at August 25, 2013 09:00

Planet Puppet

The Technical Blog of James: Finding YAML errors in puppet

I love tabs, they’re so much easier to work with, but YAML doesn’t like them. I’m constantly adding them in accidentally, and puppet’s error message is a bit cryptic:

Error: Could not retrieve catalog from remote server: Error 400 on SERVER: malformed format string - %S at /etc/puppet/manifests/foo.pp:18 on node bar.example.com

This happens during a puppet run, which in my case loads up YAML files. The tricky part was that the error wasn’t at all related to the foo.pp file, it just happened to be the first time hiera was run. So where’s the real error?

$ cd /etc/puppet/hieradata/; for i in `find . -name '*.yaml'`; do echo $i; ruby -e "require 'yaml'; YAML.parse(File.open('$i'))"; done

Run this one liner on your puppetmaster, and hiera should quickly point out which files have errors, and exactly which lines (and columns) they’re on.
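If stray tabs are the usual culprit, a cheaper first pass is to look for them directly. The sketch below is a swapped-in technique, not a YAML parser and not part of puppet or hiera: a hypothetical find_first_tab() that reports the 1-based line and column of the first tab in a buffer. YAML does allow tabs inside quoted scalars, so treat a hit as a place to look rather than a proven error; the Ruby one-liner above remains the real check.

```c
/* Hypothetical helper: scan a NUL-terminated buffer for the first tab and
   report its 1-based line and column. Returns 1 if found, 0 if not. */
int find_first_tab(const char *buf, int *line, int *col)
{
    int l = 1, c = 1;

    for (const char *p = buf; *p != '\0'; p++) {
        if (*p == '\t') {
            *line = l;
            *col = c;
            return 1;
        }
        if (*p == '\n') {
            l++;      /* a newline starts the next line at column 1 */
            c = 1;
        } else {
            c++;
        }
    }
    return 0;
}
```

Reading each *.yaml file under the hieradata directory into a NUL-terminated buffer and calling this on it would give a line/column report comparable to the one-liner's, but only for this one class of mistake.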

Happy hacking,

James

ASCII Art Farts

#5176: FEMALE BODY BUIDLER

```
,;;;;,
((/))))),
(((/\))))), I'M A FEMALE BODY BUILDER
((( ^.^ )))
`"\ = /"`
_/'-'\_
/`/'._.'\`\ I.E., I HAVE HUGE SELF-ESTEEM ISSUES
/ ( Y ) \ ............... AND SMALL TITS
/ /`\-' '-/`\ \
\ \ ) ( / /
`\\/\ /\//`
(/ \_/ \)
| | |
\ | /
\_ | _/
/ / \ \
| | | |
jgs |/ \|
/ \ / \
`-' '-`
```

by author-de@asciiartfarts.com (ASCII Art Farts: de) at August 25, 2013 07:00

Planet Debian

Matthias Klumpp: Some Tanglu updates…

Long time since I published the last update about Tanglu. So here is a short summary about what we did meanwhile (even if you don’t hear that much, there is lots of stuff going on!)

Better QA in the archive

We now use a modified version of Debian’s Britney tool to migrate packages from the newly-created “staging” suite to our current development branch “aequorea”. This ensures that all packages are completely built on all architectures and don’t break other packages.

New uploads and syncs/merges now happen through the staging area and can be tested there as well as being blocked on demand, so our current aequorea development branch stays installable and usable for development. People who want the *very* latest stuff can add the staging sources to their sources.list (but we don’t encourage you to do that).

Improved syncs from Debian

The Synchrotron toolset we use to sync packages with Debian recently gained the ability to sync packages using regular expressions. This makes it possible to sync many packages quickly which match a pattern.

Tons of infrastructure bugfixes

The infrastructure has been tweaked a lot to remove quirks, and it now works quite smoothly. Also, all Debian tools now work flawlessly in the Tanglu environment.

A few issues are remaining, but nothing really important is affected anymore (and some problems are merely cosmetic).

Long term, we plan to replace the Jenkins build infrastructure with the software which is running Paul Tagliamonte's debuild.me (only the buildd service; the archive will still be managed by dak). This requires lots of work, but will result in software usable not only by Tanglu, but also by Debian itself and by everyone who wants a build service capable of building a Debian-based distribution.

KDE 4.8 and GNOME 3.8

Tanglu now offers KDE 4.8 by default, a sync of GNOME 3.8 is currently in progress. The packages will be updated depending on our resources and otherwise just be synced from Debian unstable/experimental (and made working for Tanglu).

systemd 204 & libudev1

Tanglu now offers systemd 204 as default init system, and we transitioned the whole distribution to the latest version of udev. This even highlighted a few issues which could be fixed before the latest systemd reached Debian experimental. The udev transition went nicely, and hopefully Debian will fix bug#717418 too, soon, so both distributions run with the same udev version (which obviously makes things easier for Tanglu ^^)

Artwork

We now have a Plymouth boot screen and wallpapers, and more artwork is in progress.

Alpha-release & Live-CD…?

This is what we are working on: we have some issues in creating a working live-cd, since live-build does have some issues with our stack. We are currently resolving them, but because of the lack of manpower, this is progressing slowly (all contributors also work on other FLOSS projects, and of course also have their work).

As soon as we have working live-media, we can do a first alpha release and offer installation media.

You!

Tanglu is a large task. And we need every help we can get, right now especially technical help from people who can build packages (Debian Developers/Maintainers, Ubuntu developers, etc.) We especially need someone to take care of the live-cd.

But the website also needs some help, and we need more artwork or improved artwork.

In general, if you have an idea how to make Tanglu better (of course, something which matches its goals) and want to realize it, just get in touch with us! You can reach us on the mailing lists (tanglu-project for stuff related to the project, tanglu-devel for development of the distro) or in #tanglu / #tanglu-devel (on Freenode).

Planet Sysadmin

Chris Siebenmann: Adding basic quoting to your use of GNU Readline

Suppose that you have a program (or) that makes basic use of GNU Readline (essentially just calling readline()) and you want to add the feature of quoting filename expansions when it's needed. Sadly the GNU Readline documentation is a little bit scanty on what you need to do, so here is what has worked for me.

(The rest of this assumes that you've read the Readline programming documentation.)

As documented in the manual (eventually), you first need a function that will actually do the quoting, which you will activate by pointing rl_filename_quoting_function at it. Although the documentation neglects to mention it, this function must return a malloc()'d string; Readline will free() it for you. As far as I can tell from running my code under valgrind, you don't need to free() the TEXT argument you are handed.
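Here is a minimal sketch of such a quoting function; it is not the author's code (which the post deliberately withholds). It assumes the program parses shell-style single quotes: the filename is wrapped in single quotes and each embedded ' becomes '\''. It follows Readline's rl_quote_func_t signature (text, match_type, quote_pointer), returns a malloc()'d string as described above, and adds the closing quote only for a single match so completion can continue after a partial one, a convention borrowed from bash's own filename quoting. my_rl_quote is a hypothetical name, and the SINGLE_MATCH/MULT_MATCH fallback values are assumed to agree with readline/readline.h.

```c
#include <stdlib.h>
#include <string.h>

/* Assumed to match the values in <readline/readline.h>; only defined here
   so the sketch compiles on its own. */
#ifndef SINGLE_MATCH
#define SINGLE_MATCH 1
#endif
#ifndef MULT_MATCH
#define MULT_MATCH 2
#endif

/* Hypothetical rl_filename_quoting_function: wrap TEXT in single quotes,
   escaping each embedded ' as '\''. Readline free()s the result, so it
   must come from malloc(); TEXT itself is only read, never freed. */
char *my_rl_quote(char *text, int match_type, char *quote_pointer)
{
    size_t len = strlen(text);
    size_t extra = 0;
    char *out, *p;

    (void)quote_pointer;  /* not needed for this simple scheme */

    for (size_t i = 0; i < len; i++)
        if (text[i] == '\'')
            extra += 3;   /* ' grows into the four characters '\'' */

    /* opening quote + text + escapes + closing quote + NUL */
    out = malloc(len + extra + 3);
    if (out == NULL)
        abort();          /* this sketch treats allocation failure as fatal */

    p = out;
    *p++ = '\'';
    for (size_t i = 0; i < len; i++) {
        if (text[i] == '\'') {
            memcpy(p, "'\\''", 4);
            p += 4;
        } else {
            *p++ = text[i];
        }
    }
    /* Close the quote only when the completion is final, so Readline can
       keep completing inside the open quote after a partial match. */
    if (match_type == SINGLE_MATCH)
        *p++ = '\'';
    *p = '\0';
    return out;
}

/* Hook-up (example values, adjust to your program's syntax):
   rl_filename_quoting_function = my_rl_quote;
   rl_filename_quote_characters = " \t\n\"\\'`$><=;|&{(";
   rl_completer_quote_characters = "'\"";
*/
```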

You must also set rl_filename_quote_characters and rl_completer_quote_characters to appropriate values. To be fully correct you probably also want to define a dequoter function, but I've gotten away without it so far. In simple cases Readline will simply ignore your quote character at the front when doing further filename completion; I think you only need a dequoter function to handle the case where you've had to escape something in the filename.

With a sane library this would be good enough. But contrary to what the documentation alleges, this doesn't seem to be sufficient for Readline. Instead you need to hook into Readline completion in order to tell Readline that yes really, it should quote things. You do this by the following:

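```c
#include <stdio.h>               /* older Readline headers expect FILE to be declared */
#include <readline/readline.h>

/* Attempted-completion hook whose only job is to tell Readline that
   filename quoting really is wanted; returning NULL lets Readline fall
   back to its default filename completion. */
char **my_rl_yesquote(const char *init, int start, int end)
{
    rl_filename_quoting_desired = 1;
    return NULL;
}

/* initialize by setting:
   rl_attempted_completion_function = my_rl_yesquote;
*/
```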

Your 'attempted completion function' exists purely for this, although you can of course do more if you want. Note that the need for this function and its actions is in direct contradiction to the Readline documentation as far as I can tell. On the other hand, following the documentation doesn't work (yes, I tried it). Possibly there is some magic involved in just how you invoke Readline and some unintentional side effects going on.

(On the other hand I got this from a Stackoverflow answer, so other people are having the same problem.)

Note that a really good job of quoting and dequoting filenames needs a certain number of other functions, per the Readline documentation. I can't be bothered to worry about them (or write them) so far.

I was going to put my actual code in here as an example but it turns out it is too embarrassingly ugly and hacky for me to do it in its current state and I'm not willing to include cleaner code that I haven't actually run and tested. Check back later for acceptable code that I know doesn't explode.

(Normally I clean up my hacky 'it finally works' first pass code, but I was rather irritated by the time I got something that worked so I just stopped and put the whole thing out of my mind.)

Boing Boing

Second panda cub born dead

PostSecret

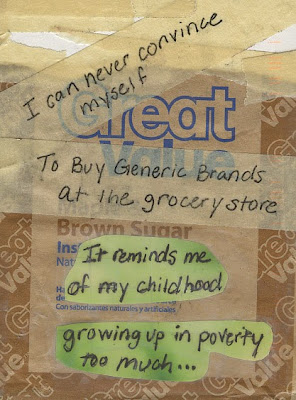

Sunday Secrets

in their secrets anonymously on one side of a homemade postcard.

PostSecret, 13345 Copper Ridge Road, Germantown, MD 20874

See More Secrets. Follow PostSecret on Twitter.

The Times of India (August 25, 2013)

Frank Warren thought it was just one of those crazy ideas when he asked people to reveal their secrets, anonymously, on his website. The number of responses he got left him and the whole world astonished...

The ones who follow the policy of taking a secret to the grave may take pride in their strength of character; but often it comes at the cost of their own happiness. Author Frank Warren knows a thing or two about the burden of keeping secrets. The American, who started a website called PostSecret in 2005 — where people can anonymously post their deepest, most embarrassing secrets — receives about 1,000 secrets every week! The website had over 100 million visitors in just the first three years. From government conspiracies (he was questioned by the FBI once) to extra-marital affairs, what makes people from all over the world want to spill the beans anonymously?

If mind doesn't express, body explodes

Psychiatrist Dr Harish Shetty says, "If our mind doesn't express, our body explodes. Secrets need an outlet and sharing secrets is incredibly freeing." It's not an easy task to keep something buried deep within. It's not how grave the matter is; it's the inability to share it that makes life tough.

Film and theatre actor Namit Das says, "Secrets can turn into a problem when they are about someone close because it may be difficult to face that person. You do not know what to do when you can neither hide the secret nor reveal it."

Relationship counsellor Dr Rajan Bhonsle explains why one inherently feels guilty about keeping a secret, "Our subconscious mind joins the battle against secrecy, and we often find ourselves telling the truth in dreams and occasional drunken disclosures. The more secretive we are, the more separate we feel from our own lives." It was a similar feeling that made model and actor Sudhanshu Pandey blurt out a secret that he had been keeping for a while, "I was keeping this secret for someone close to me, but I realised it wasn't fair to his family members. So I went ahead and shared it with them. It was quite freeing."

Keeping our own secret is the toughest

A fib here, a white lie there may be okay, but a secret becomes burdensome when it has the potential to destroy someone close to us in the long run. Warren suggests, "If we could find the right person to talk to, we might realise that talking about an embarrassing story might lead to a more authentic relationship with others, and even with ourselves."

Actor Vishakha Singh agrees, "It's easier to keep someone else's secret rather than one's own. If it's your own secret, the desire to unload is much stronger. Since our body has a strange mechanism that reacts to stress, keeping a secret can harm one physically and mentally. I experienced that kind of stress when I saw my first strand of white hair!"

In his 2012 TED Talk, Warren began by saying, "There are two kinds of secrets, the ones we hide from others, and those we keep from ourselves."

According to him, sharing a secret has the potential to transform lives. He describes how: "Once I received a letter from a lady, who wrote: 'Dear Frank, do you know that I left my boyfriend of a year and a half because of someone's postcard on your website that read, 'His temper is so scary, I've lost all my opinions'?"

After reading a complete stranger's postcard that mirrored her own experience, this woman found the courage to leave an abusive relationship. That's the biggest power of sharing a secret: we get to know that we aren't alone. Someone, somewhere is feeling exactly the same about life, grappling with the same problems we are, and not giving up. Just knowing that can lift some of the burden of a secret.

Documentary filmmaker Madhureeta Anand says, "There was an instance when I was extremely hurt by what someone had done to me. I didn't tell anyone about it. Two years later, when I couldn't bear it anymore, I told a friend and we talked about it. The weight just disappeared!"

Wrestler Sushil Kumar says he has no such worries because he simply wouldn't ever keep a secret: "I would end up telling 10 lies in the attempt to hide a secret, and get caught!"

3 STEPS TO SENSIBLE REVEALING

Is the secret troubling you?

If the secret is disturbing you emotionally, share it. Revealing it will reduce the associated guilt.

Is he/she a good confidante?

The person you are sharing your secret with should be discreet and non-judgmental.

Is your loved one likely to discover the secret?

If there is a high chance of your loved one discovering the secret, consider coming clean right away. It will cause pain, but learning it from someone else will hurt your loved one even more.

IF YOU CAN'T SHARE YOUR SECRET...

Write it down in a diary. To keep the information private, you can opt for a lock-and-key diary. This way, the secret will stay with you yet you'll feel relieved.

You can keep a 'secret jar'. If you are secretive by nature, you may have more than one thing weighing you down. You can write about them on a chit and put it in the jar. This way, you get rid of the thought without making it public.

Visit a counsellor. A professional won't judge you. He will be able to tell you the consequences of sharing your secret, to help you cope better.

"I remember a scene from Wong Kar Wai's film In the Mood for Love, where the lead actor recounts an old saying that if you make a hole in a tree and whisper your secret into it, it remains there forever. I believe in it too"

—Namit Das, actor

"I don't keep secrets from the people who matter to me. To the rest of the world, it is not a secret, it is keeping my privacy"

—Aditi Rao Hydari, actor

"I am a true scorpion. I find it very difficult to trust people. I am quite stubborn by nature. If I plan not to tell my secret, I just won't"

—Anu Malik, music director

"I don't have the ability to keep secrets. I like to share everything with my wife and family. I would end up saying 10 lies in the attempt to hide a secret. Also, keeping a secret would take a toll on my mental health. It'll always be at the back of my mind, nagging me"

—Sushil Kumar, wrestler

"Keeping my family in the dark about something, while I know of it, would be very burdensome. It wouldn't allow me to be at peace with myself"

—Ayushmann Khurrana, actor

by noreply@blogger.com (postsecret) at August 25, 2013 02:41

Daring Fireball

Krugman on Microsoft and Apple

There’s much to quibble about in this Krugman post, but I’ll keep it short:

The Microsoft story is familiar. Back in the 80s, Microsoft and Apple both had operating systems to sell; Apple’s was clearly better. But Apple misunderstood the nature of the market: it said, “We have a better system, so we’re going to make it available only on our own beautiful machines, and charge premium prices.” Meanwhile Microsoft licensed its system to lots of people making cheap machines — and established a commanding position through network externalities. People used Windows because other people used Windows — there was more software available, corporate tech departments were prepared to provide support, etc.

Two things.

First, when we talk about the ’80s and ’90s and Apple, we’re talking about the Mac. And though the Mac suffered mightily in the late ’90s, dropping so low that it almost brought the entire company down, today, the Mac makes Apple the world’s most profitable PC maker. Even if you don’t count the iPad as a “PC”, no one makes more money selling personal computers than Apple. In the long run, Apple’s strategy paid off.

Second, Krugman is right about the fundamental difference between Windows’s success and iOS’s. The beauty of the Windows hegemony is that it wasn’t the best, and didn’t have to be the best. Once their OS monopoly was established, they just had to show up. Apple’s success today is predicated on iOS being the best. They have to stay at the top of the game, both design- and quality-wise, to maintain their success. That’s riskier.

Boing Boing

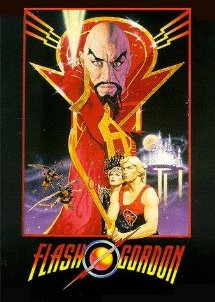

Flash Gordon (1980)

"Klytus, I'm bored. What plaything can you offer me today?"

Last year Pesco shared the classic football fight from Sam J. Jones' epic title role in the 1980 version of Flash Gordon. I've just re-watched the film and never cease to be thrilled.

Flash is one of the most iconic sci-fi characters of all time: an American football player thrown into a galaxy-spanning adventure to save the Earth. This Mike Hodges-directed version of the story has an incredible cast. Max von Sydow as Ming the Merciless, Timothy Dalton as Prince Barin and Brian Blessed as Vultan the Hawkman are just a few of the fantastic performances.

Queen's soundtrack always gets my heart pumping and leaves me certain that Flash will save every one of us.

I’m physically disabled. I need to park as close to my destination as possible, and I need a wide parking bay with hatching either side, so I can open my doors fully in order to get in and out – if I use a regular bay and someone parks alongside me, I can’t get back into my car. Luckily, the number and sizes of these bays are specified by the Department for Transport (DfT), so I can rely on them being present when I need to shop. The specifications are detailed

The duck boat tour just rolled under my window, megaphone-shouting that "SOMA IS THE WORST NEIGHBORHOOD IN SAN FRANCISCO!"

REUTERS/David Gray

A new bridge in Dresden, Germany,

Who Needs Sally Bercow When You Have Lily Allen?

Sourcing its story on files provided by whistleblower and former NSA contractor Edward Snowden, the

On the Phenomenon of Bullshit Jobs

It was a documentary, you know.

The Huawei Tecal ES3000 is a family of full-height, half-length enterprise application accelerators that leverage MLC NAND in capacities up to 2.4TB and a PCIe 2.0 x8 interface. On the surface the Huawei cards sound similar to many other products on the market, but a deeper look reveals a unique triple-controller design that joins two PCBs together to form an impressive offering. At the top end of the performance scale this means 3.2GB/s max read bandwidth and 2.8GB/s write. From a latency angle, all three capacities can drive a read latency of 49µs and a write latency of 8µs. The cards have a number of additional features as well, including enhanced error checking, power-fail protection and mechanisms to improve endurance over the course of their life.

The Huawei Tecal ES3000 is a family of full-height half-length enterprise application accelerators that leverage MLC NAND in capacities up to 2.4TB and PCIe interface (2.0 x8). On the surface the Huawei cards sound similar to many other products on the market, but a deeper look reveals a unique triple controller design that joins two PCBs together to form an impressive offering. On the top end of the performance scale this means 3.2GB/s max read bandwidth and 2.8GB/s write. From a latency angle, all thee capacities can drive 49µs and write latency of 8µs. The cards have a number of additional features as well including enhanced error checking, power fail protection and mechanisms to drive enhanced endurance over the course of their life.